
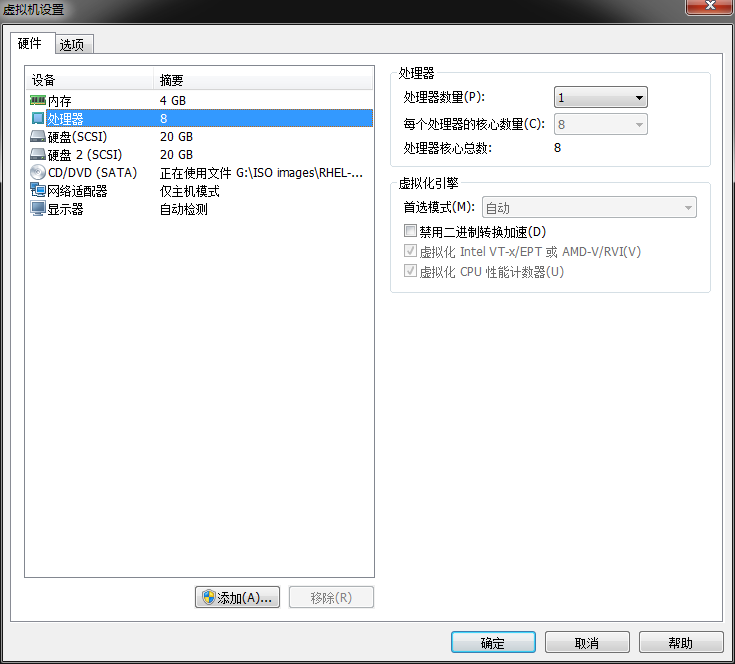

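As I write this, OpenStack Liberty was released only a few weeks ago. Enterprise production environments treat stability as their primary criterion, so it will be some time before they accept and adopt this new release. So that readers can apply what they learn right away, this chapter uses the Juno release for the experiments. To get the best performance out of the cloud platform, enable hardware virtualization (Intel VT-x/AMD-V) for the virtual machine, give it at least 4 GB of memory (8 GB or more recommended), and add an extra hard disk of at least 20 GB.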

| Hostname | IP address/subnet | DNS server |
|---|---|---|
| openstack.linuxprobe.com | 192.168.10.10/24 | 192.168.10.10 |
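The two hardware requirements above can be checked from inside the VM before installing anything. The following is a minimal sketch. The function names are hypothetical helpers and not part of packstack. It looks for the `vmx` (Intel VT-x) or `svm` (AMD-V) CPU flag in /proc/cpuinfo and reads MemTotal from /proc/meminfo. MemTotal is always somewhat lower than the RAM assigned to the VM, because the kernel reserves part of it. For that reason the default threshold of 3500 MiB is only an assumed approximation of "4 GB". The extra 20 GB disk is not checked.

```bash
#!/usr/bin/env bash
# Pre-flight check for the lab host (a sketch, not part of packstack).
# The functions read /proc by default; other files can be passed for testing.

# Succeeds if the CPU exposes Intel VT-x (vmx) or AMD-V (svm) to this VM.
has_virt_flags() {
    grep -Eqw 'vmx|svm' "${1:-/proc/cpuinfo}"
}

# Prints total memory in MiB (MemTotal is reported in kB).
mem_total_mb() {
    awk '/^MemTotal:/ { print int($2 / 1024) }' "${1:-/proc/meminfo}"
}

# Prints "OK" or one line per problem; returns non-zero if any check fails.
# min_mb defaults to 3500 (assumption: a "4 GB" VM reports a little less).
preflight() {
    local cpuinfo=${1:-/proc/cpuinfo} meminfo=${2:-/proc/meminfo} min_mb=${3:-3500}
    local ok=0 mem
    if ! has_virt_flags "$cpuinfo"; then
        echo "virtualization (vmx/svm) is not exposed to this VM"
        ok=1
    fi
    mem=$(mem_total_mb "$meminfo")
    if (( mem < min_mb )); then
        echo "only ${mem} MiB of RAM, at least ${min_mb} MiB expected"
        ok=1
    fi
    (( ok == 0 )) && echo "OK"
    return "$ok"
}
```

Run `preflight` with no arguments on the server. If it reports missing virtualization, enable the VT-x/AMD-V option in the hypervisor's settings for this VM and boot it again.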

Set the server's hostname:

[cc lang="bash"]
[root@openstack ~]# vim /etc/hostname
openstack.linuxprobe.com
[/cc]

Use the vim editor to write the mapping between the hostname (domain name) and the IP address:

[cc lang="bash"]
[root@openstack ~]# vim /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.10.10 openstack.linuxprobe.com openstack
[/cc]

After setting the server's NIC address to 192.168.10.10, test connectivity to the host:

[cc lang="bash"]
[root@openstack ~]# ping $HOSTNAME
PING openstack.linuxprobe.com (192.168.10.10) 56(84) bytes of data.
64 bytes from openstack.linuxprobe.com (192.168.10.10): icmp_seq=1 ttl=64 time=0.099 ms
64 bytes from openstack.linuxprobe.com (192.168.10.10): icmp_seq=2 ttl=64 time=0.107 ms
64 bytes from openstack.linuxprobe.com (192.168.10.10): icmp_seq=3 ttl=64 time=0.070 ms
64 bytes from openstack.linuxprobe.com (192.168.10.10): icmp_seq=4 ttl=64 time=0.075 ms
^C
--- openstack.linuxprobe.com ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 3001ms
rtt min/avg/max/mdev = 0.070/0.087/0.107/0.019 ms
[/cc]

Create the mount point for the system image:

[cc lang="bash"]
[root@openstack ~]# mkdir -p /media/cdrom
[/cc]

Add the image and its mount point to /etc/fstab:

[cc lang="bash"]
[root@openstack ~]# vim /etc/fstab
# HEADER: This file was autogenerated at 2016-01-28 00:57:19 +0800
# HEADER: by puppet. While it can still be managed manually, it
# HEADER: is definitely not recommended.
#
# /etc/fstab
# Created by anaconda on Wed Jan 27 15:24:00 2016
#
# Accessible filesystems, by reference, are maintained under '/dev/disk'
# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
#
/dev/mapper/rhel-root   /                       xfs     defaults        1 1
UUID=c738dff6-b025-4333-9673-61b10eaf2268 /boot xfs     defaults        1 2
/dev/mapper/rhel-swap   swap                    swap    defaults        0 0
/dev/cdrom              /media/cdrom            iso9660 defaults        0 0
[/cc]

Mount the system image device:

[cc lang="bash"]
[root@openstack ~]# mount -a
mount: /dev/sr0 is write-protected, mounting read-only
[/cc]

Write the basic yum repository configuration:

[cc lang="bash"]
[root@openstack ~]# vim /etc/yum.repos.d/rhel.repo
[base]
name=base
baseurl=file:///media/cdrom
enabled=1
gpgcheck=0
[/cc]

Download the EPEL repository and the OpenStack Juno packages from the page below, then upload them to the server's /media directory:

Software download page: https://www.linuxprobe.com/tools/

OpenStack Juno (cloud computing platform software): OpenStack abstracts resources such as compute, storage, networking and software into services that users can consume remotely over the Internet. Users pay for what they actually use, and the platform scales very well through virtualization.

EPEL (extra software repository): EPEL (Extra Packages for Enterprise Linux) provides additional packages that the enterprise distribution does not ship by default.

Cirros (minimal operating system): Cirros is an extremely small operating system that is typically loaded into an OpenStack platform.
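The rhel.repo file above, and the openstack.repo and epel.repo files written next, all use the same five-line stanza. The hypothetical helper below writes such a stanza for a local directory. It reproduces the tutorial's lab settings exactly, including `gpgcheck=0`. That setting turns off package signature checking and is acceptable only in a lab.

```bash
#!/usr/bin/env bash
# Writes a local yum repository file (a sketch mirroring this tutorial's repo files).
# Arguments: repository id, local directory, target directory (default /etc/yum.repos.d).
# The file is named after the id, e.g. "openstack" -> openstack.repo.
write_local_repo() {
    local id=$1 dir=$2 repo_dir=${3:-/etc/yum.repos.d}
    if [[ ! -d $dir ]]; then
        echo "repository directory $dir does not exist" >&2
        return 1
    fi
    # gpgcheck=0 disables signature checks, as in the tutorial's lab setup.
    cat > "$repo_dir/$id.repo" <<EOF
[$id]
name=$id
baseurl=file://$dir
enabled=1
gpgcheck=0
EOF
}

# Example (as root, after the archives in /media have been extracted):
#   write_local_repo openstack /media/openstack-juno
#   write_local_repo epel /media/EPEL
#   yum clean all && yum repolist
```

Checking that the directory exists first catches a mistyped path before yum runs. Without that check, the error would only show up as a metadata download failure.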

Change to /media and list its contents:

[cc lang="bash"]
[root@openstack ~]# cd /media
[root@openstack media]# ls
cdrom epel.tar.bz2 openstack-juno.tar.bz2
[/cc]

Extract both archives:

[cc lang="bash"]
[root@openstack media]# tar xjf epel.tar.bz2
[root@openstack media]# tar xjf openstack-juno.tar.bz2
[/cc]

Write the yum repository files for OpenStack and EPEL:

[cc lang="bash"]
[root@openstack media]# vim /etc/yum.repos.d/openstack.repo
[openstack]
name=openstack
baseurl=file:///media/openstack-juno
enabled=1
gpgcheck=0
[root@openstack media]# vim /etc/yum.repos.d/epel.repo
[epel]
name=epel
baseurl=file:///media/EPEL
enabled=1
gpgcheck=0
[/cc]

Initialize /dev/sdb as an LVM physical volume and create a volume group named cinder-volumes on it. Cinder will create the cloud disks in this group:

[cc lang="bash"]
[root@openstack media]# pvcreate /dev/sdb
  Physical volume "/dev/sdb" successfully created
[root@openstack media]# vgcreate cinder-volumes /dev/sdb
  Volume group "cinder-volumes" successfully created
[/cc]

Reboot the system:

[cc lang="bash"]
[root@openstack media]# reboot
[/cc]

Install packstack, the OpenStack installer that works from an answer file:

[cc lang="bash"]
[root@openstack ~]# yum install openstack-packstack
………………part of the installation output omitted………………
Installing:
 openstack-packstack noarch 2014.2-0.4.dev1266.g63d9c50.el7.centos openstack 210 k
Installing for dependencies:
 libyaml x86_64 0.1.4-10.el7 base 55 k
 openstack-packstack-puppet noarch 2014.2-0.4.dev1266.g63d9c50.el7.centos openstack 43 k
 openstack-puppet-modules noarch 2014.2.1-0.5.el7.centos openstack 1.3 M
 perl x86_64 4:5.16.3-283.el7 base 8.0 M
 perl-Carp noarch 1.26-244.el7 base 19 k
 perl-Encode x86_64 2.51-7.el7 base 1.5 M
 perl-Exporter noarch 5.68-3.el7 base 28 k
 perl-File-Path noarch 2.09-2.el7 base 27 k
 perl-File-Temp noarch 0.23.01-3.el7 base 56 k
 perl-Filter x86_64 1.49-3.el7 base 76 k
 perl-Getopt-Long noarch 2.40-2.el7 base 56 k
 perl-HTTP-Tiny noarch 0.033-3.el7 base 38 k
 perl-PathTools x86_64 3.40-5.el7 base 83 k
 perl-Pod-Escapes noarch 1:1.04-283.el7 base 50 k
 perl-Pod-Perldoc noarch 3.20-4.el7 base 87 k
 perl-Pod-Simple noarch 1:3.28-4.el7 base 216 k
 perl-Pod-Usage noarch 1.63-3.el7 base 27 k
 perl-Scalar-List-Utils x86_64 1.27-248.el7 base 36 k
 perl-Socket x86_64 2.010-3.el7 base 49 k
 perl-Storable x86_64 2.45-3.el7 base 77 k
 perl-Text-ParseWords noarch 3.29-4.el7 base 14 k
 perl-Time-Local noarch 1.2300-2.el7 base 24 k
 perl-constant noarch 1.27-2.el7 base 19 k
 perl-libs x86_64 4:5.16.3-283.el7 base 686 k
 perl-macros x86_64 4:5.16.3-283.el7 base 42 k
 perl-parent noarch 1:0.225-244.el7 base 12 k
 perl-podlators noarch 2.5.1-3.el7 base 112 k
 perl-threads x86_64 1.87-4.el7 base 49 k
 perl-threads-shared x86_64 1.43-6.el7 base 39 k
 python-netaddr noarch 0.7.12-1.el7.centos openstack 1.3 M
 ruby x86_64 2.0.0.353-20.el7 base 66 k
 ruby-irb noarch 2.0.0.353-20.el7 base 87 k
 ruby-libs x86_64 2.0.0.353-20.el7 base 2.8 M
 rubygem-bigdecimal x86_64 1.2.0-20.el7 base 78 k
 rubygem-io-console x86_64 0.4.2-20.el7 base 49 k
 rubygem-json x86_64 1.7.7-20.el7 base 74 k
 rubygem-psych x86_64 2.0.0-20.el7 base 76 k
 rubygem-rdoc noarch 4.0.0-20.el7 base 317 k
 rubygems noarch 2.0.14-20.el7 base 211 k
………………part of the installation output omitted………………
Complete!
[/cc]

Run packstack to install all OpenStack services on this single host (--allinone), without the demo resources (--provision-demo=n) and without Nagios (--nagios-install=n):

[cc lang="bash"]
[root@openstack ~]# packstack --allinone --provision-demo=n --nagios-install=n
Welcome to Installer setup utility
Packstack changed given value to required value /root/.ssh/id_rsa.pub

Installing:
Clean Up [ DONE ]
Setting up ssh keys [ DONE ]
Discovering hosts' details [ DONE ]
Adding pre install manifest entries [ DONE ]
Preparing servers [ DONE ]
Adding AMQP manifest entries [ DONE ]
Adding MySQL manifest entries [ DONE ]
Adding Keystone manifest entries [ DONE ]
Adding Glance Keystone manifest entries [ DONE ]
Adding Glance manifest entries [ DONE ]
Adding Cinder Keystone manifest entries [ DONE ]
Adding Cinder manifest entries [ DONE ]
Checking if the Cinder server has a cinder-volumes vg[ DONE ]
Adding Nova API manifest entries [ DONE ]
Adding Nova Keystone manifest entries [ DONE ]
Adding Nova Cert manifest entries [ DONE ]
Adding Nova Conductor manifest entries [ DONE ]
Creating ssh keys for Nova migration [ DONE ]
Gathering ssh host keys for Nova migration [ DONE ]
Adding Nova Compute manifest entries [ DONE ]
Adding Nova Scheduler manifest entries [ DONE ]
Adding Nova VNC Proxy manifest entries [ DONE ]
Adding Openstack Network-related Nova manifest entries[ DONE ]
Adding Nova Common manifest entries [ DONE ]
Adding Neutron API manifest entries [ DONE ]
Adding Neutron Keystone manifest entries [ DONE ]
Adding Neutron L3 manifest entries [ DONE ]
Adding Neutron L2 Agent manifest entries [ DONE ]
Adding Neutron DHCP Agent manifest entries [ DONE ]
Adding Neutron LBaaS Agent manifest entries [ DONE ]
Adding Neutron Metering Agent manifest entries [ DONE ]
Adding Neutron Metadata Agent manifest entries [ DONE ]
Checking if NetworkManager is enabled and running [ DONE ]
Adding OpenStack Client manifest entries [ DONE ]
Adding Horizon manifest entries [ DONE ]
Adding Swift Keystone manifest entries [ DONE ]
Adding Swift builder manifest entries [ DONE ]
Adding Swift proxy manifest entries [ DONE ]
Adding Swift storage manifest entries [ DONE ]
Adding Swift common manifest entries [ DONE ]
Adding MongoDB manifest entries [ DONE ]
Adding Ceilometer manifest entries [ DONE ]
Adding Ceilometer Keystone manifest entries [ DONE ]
Adding post install manifest entries [ DONE ]
Installing Dependencies [ DONE ]
Copying Puppet modules and manifests [ DONE ]
Applying 192.168.10.10_prescript.pp
192.168.10.10_prescript.pp: [ DONE ]
Applying 192.168.10.10_amqp.pp
Applying 192.168.10.10_mysql.pp
192.168.10.10_amqp.pp: [ DONE ]
192.168.10.10_mysql.pp: [ DONE ]
Applying 192.168.10.10_keystone.pp
Applying 192.168.10.10_glance.pp
Applying 192.168.10.10_cinder.pp
192.168.10.10_keystone.pp: [ DONE ]
192.168.10.10_cinder.pp: [ DONE ]
192.168.10.10_glance.pp: [ DONE ]
Applying 192.168.10.10_api_nova.pp
192.168.10.10_api_nova.pp: [ DONE ]
Applying 192.168.10.10_nova.pp
192.168.10.10_nova.pp: [ DONE ]
Applying 192.168.10.10_neutron.pp
192.168.10.10_neutron.pp: [ DONE ]
Applying 192.168.10.10_neutron_fwaas.pp
Applying 192.168.10.10_osclient.pp
Applying 192.168.10.10_horizon.pp
192.168.10.10_neutron_fwaas.pp: [ DONE ]
192.168.10.10_osclient.pp: [ DONE ]
192.168.10.10_horizon.pp: [ DONE ]
Applying 192.168.10.10_ring_swift.pp
192.168.10.10_ring_swift.pp: [ DONE ]
Applying 192.168.10.10_swift.pp
192.168.10.10_swift.pp: [ DONE ]
Applying 192.168.10.10_mongodb.pp
192.168.10.10_mongodb.pp: [ DONE ]
Applying 192.168.10.10_ceilometer.pp
192.168.10.10_ceilometer.pp: [ DONE ]
Applying 192.168.10.10_postscript.pp
192.168.10.10_postscript.pp: [ DONE ]
Applying Puppet manifests [ DONE ]
Finalizing [ DONE ]

 **** Installation completed successfully ******

Additional information:
 * A new answerfile was created in: /root/packstack-answers-20160128-004334.txt
 * Time synchronization installation was skipped. Please note that unsynchronized time on server instances might be problem for some OpenStack components.
 * Did not create a cinder volume group, one already existed
 * File /root/keystonerc_admin has been created on OpenStack client host 192.168.10.10. To use the command line tools you need to source the file.
 * To access the OpenStack Dashboard browse to http://192.168.10.10/dashboard .
Please, find your login credentials stored in the keystonerc_admin in your home directory.
 * Because of the kernel update the host 192.168.10.10 requires reboot.
 * The installation log file is available at: /var/tmp/packstack/20160128-004334-tNBVhA/openstack-setup.log
 * The generated manifests are available at: /var/tmp/packstack/20160128-004334-tNBVhA/manifests
[/cc]

Create the configuration file for br-ex, the external bridge used by the cloud platform. The broadcast address of 192.168.10.0/24 is 192.168.10.255:

[cc lang="bash"]
[root@openstack ~]# vim /etc/sysconfig/network-scripts/ifcfg-br-ex
DEVICE=br-ex
IPADDR=192.168.10.10
NETMASK=255.255.255.0
BOOTPROTO=static
DNS1=192.168.10.1
GATEWAY=192.168.10.1
BROADCAST=192.168.10.255
NM_CONTROLLED=no
DEFROUTE=yes
IPV4_FAILURE_FATAL=yes
IPV6INIT=no
ONBOOT=yes
DEVICETYPE=ovs
TYPE="OVSIntPort"
OVS_BRIDGE=br-ex
[/cc]

Change the physical NIC's configuration to the following:

[cc lang="bash"]
[root@openstack ~]# vim /etc/sysconfig/network-scripts/ifcfg-eno16777728
DEVICE="eno16777728"
ONBOOT=yes
TYPE=OVSPort
DEVICETYPE=ovs
OVS_BRIDGE=br-ex
NM_CONTROLLED=no
IPV6INIT=no
[/cc]

Add the NIC to the OVS bridge br-ex:

[cc lang="bash"]
[root@openstack ~]# ovs-vsctl add-port br-ex eno16777728
[root@openstack ~]# ovs-vsctl show
55501ff1-856c-46f1-8a00-5c61e48bb64d
    Bridge br-ex
        Port br-ex
            Interface br-ex
                type: internal
        Port "eno16777728"
            Interface "eno16777728"
    Bridge br-int
        fail_mode: secure
        Port br-int
            Interface br-int
                type: internal
        Port patch-tun
            Interface patch-tun
                type: patch
                options: {peer=patch-int}
    Bridge br-tun
        Port patch-int
            Interface patch-int
                type: patch
                options: {peer=patch-tun}
        Port br-tun
            Interface br-tun
                type: internal
    ovs_version: "2.1.3"
[/cc]

Reboot so that the network devices come up with the new configuration:

[cc lang="bash"]
[root@openstack ~]# reboot
[/cc]

Load the admin credentials (keystonerc_admin) and check the status of the services:

[cc lang="bash"]
[root@openstack ~]# source keystonerc_admin
[root@openstack ~(keystone_admin)]# openstack-status
== Nova services ==
openstack-nova-api: active
openstack-nova-cert: active
openstack-nova-compute: active
openstack-nova-network: inactive (disabled on boot)
openstack-nova-scheduler: active
openstack-nova-volume: inactive (disabled on boot)
openstack-nova-conductor: active
== Glance services ==
openstack-glance-api: active
openstack-glance-registry: active
== Keystone service ==
openstack-keystone: active
== Horizon service ==
openstack-dashboard: active
== neutron services ==
neutron-server: active
neutron-dhcp-agent: active
neutron-l3-agent: active
neutron-metadata-agent: active
neutron-lbaas-agent: inactive (disabled on boot)
neutron-openvswitch-agent: active
neutron-linuxbridge-agent: inactive (disabled on boot)
neutron-ryu-agent: inactive (disabled on boot)
neutron-nec-agent: inactive (disabled on boot)
neutron-mlnx-agent: inactive (disabled on boot)
== Swift services ==
openstack-swift-proxy: active
openstack-swift-account: active
openstack-swift-container: active
openstack-swift-object: active
== Cinder services ==
openstack-cinder-api: active
openstack-cinder-scheduler: active
openstack-cinder-volume: active
openstack-cinder-backup: active
== Ceilometer services ==
openstack-ceilometer-api: active
openstack-ceilometer-central: active
openstack-ceilometer-compute: active
openstack-ceilometer-collector: active
openstack-ceilometer-alarm-notifier: active
openstack-ceilometer-alarm-evaluator: active
== Support services ==
libvirtd: active
openvswitch: active
dbus: active
tgtd: inactive (disabled on boot)
rabbitmq-server: active
memcached: active
== Keystone users ==
+----------------------------------+------------+---------+----------------------+
| id                               | name       | enabled | email                |
+----------------------------------+------------+---------+----------------------+
| 7f1f43a0002e4fb9a04b9b1480294e08 | admin      | True    | test@test.com        |
| c7570a0d3e264f0191d8108359100cdd | ceilometer | True    | ceilometer@localhost |
| 9d3d1b46599341638771c33bcebe17fc | cinder     | True    | cinder@localhost     |
| 52a803edcc4e479ea147e69ca2966f46 | glance     | True    | glance@localhost     |
| 8b0bcd19b11f49059bc100d260f39d50 | neutron    | True    | neutron@localhost    |
| 953e01b228ef480db551dd05d43eb6d1 | nova       | True    | nova@localhost       |
| 16ced2f73c034e58a0951e46f22eddc8 | swift      | True    | swift@localhost      |
+----------------------------------+------------+---------+----------------------+
== Glance images ==
+----+------+-------------+------------------+------+--------+
| ID | Name | Disk Format | Container Format | Size | Status |
+----+------+-------------+------------------+------+--------+
+----+------+-------------+------------------+------+--------+
== Nova managed services ==
+----+------------------+--------------------------+----------+---------+-------+----------------------------+-----------------+
| Id | Binary           | Host                     | Zone     | Status  | State | Updated_at                 | Disabled Reason |
+----+------------------+--------------------------+----------+---------+-------+----------------------------+-----------------+
| 1  | nova-consoleauth | openstack.linuxprobe.com | internal | enabled | up    | 2016-01-29T04:36:20.000000 | -               |
| 2  | nova-scheduler   | openstack.linuxprobe.com | internal | enabled | up    | 2016-01-29T04:36:20.000000 | -               |
| 3  | nova-conductor   | openstack.linuxprobe.com | internal | enabled | up    | 2016-01-29T04:36:20.000000 | -               |
| 4  | nova-compute     | openstack.linuxprobe.com | nova     | enabled | up    | 2016-01-29T04:36:16.000000 | -               |
| 5  | nova-cert        | openstack.linuxprobe.com | internal | enabled | up    | 2016-01-29T04:36:20.000000 | -               |
+----+------------------+--------------------------+----------+---------+-------+----------------------------+-----------------+
== Nova networks ==
+----+-------+------+
| ID | Label | Cidr |
+----+-------+------+
+----+-------+------+
== Nova instance flavors ==
+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+
| ID | Name      | Memory_MB | Disk | Ephemeral | Swap | VCPUs | RXTX_Factor | Is_Public |
+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+
| 1  | m1.tiny   | 512       | 1    | 0         |      | 1     | 1.0         | True      |
| 2  | m1.small  | 2048      | 20   | 0         |      | 1     | 1.0         | True      |
| 3  | m1.medium | 4096      | 40   | 0         |      | 2     | 1.0         | True      |
| 4  | m1.large  | 8192      | 80   | 0         |      | 4     | 1.0         | True      |
| 5  | m1.xlarge | 16384     | 160  | 0         |      | 8     | 1.0         | True      |
+----+-----------+-----------+------+-----------+------+-------+-------------+-----------+
== Nova instances ==
+----+------+--------+------------+-------------+----------+
| ID | Name | Status | Task State | Power State | Networks |
+----+------+--------+------------+-------------+----------+
+----+------+--------+------------+-------------+----------+
[/cc]

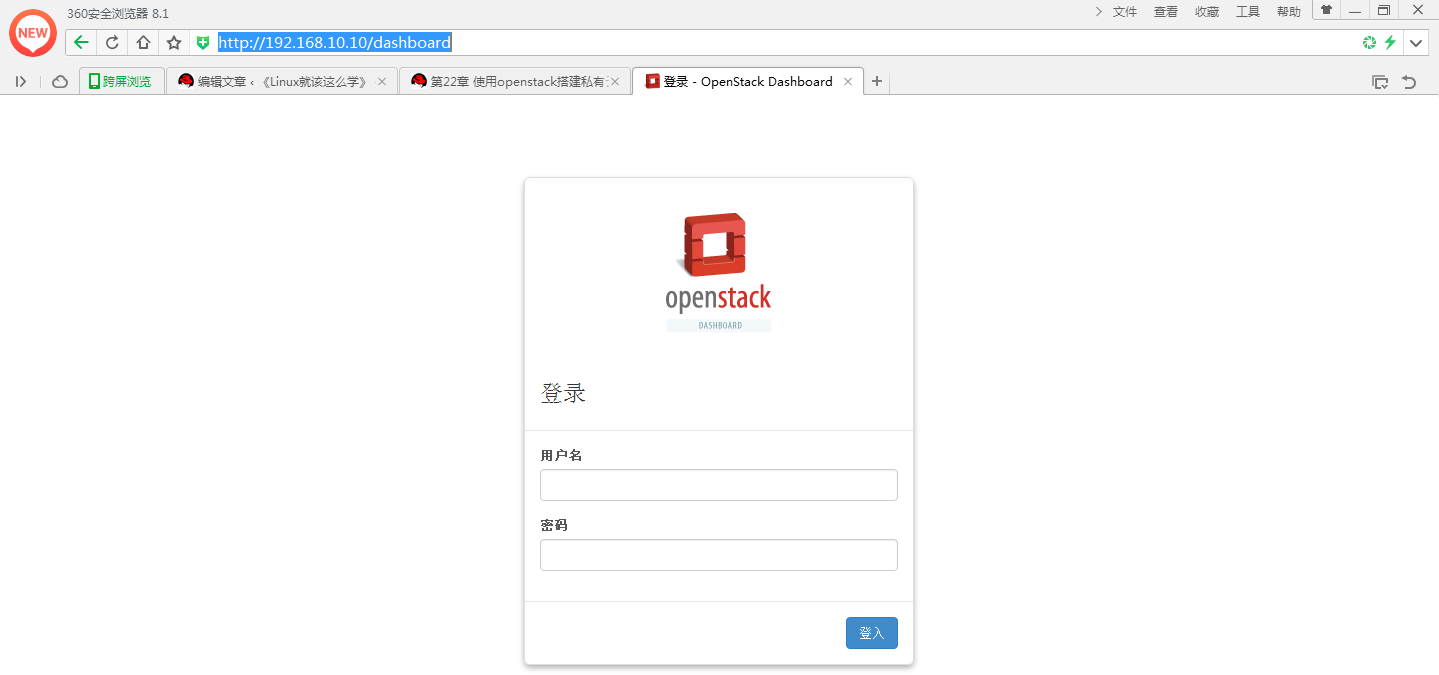
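The openstack-status report is long, so it can help to filter it down to the services that are not running. The sketch below assumes the `name: state` line format shown above and prints every service whose state is anything other than `active`. Several inactive entries are expected in this all-in-one layout. Examples are openstack-nova-network, because networking is provided by Neutron, and the alternative Neutron agents, because this installation uses the Open vSwitch agent. Treat the output as a list to review, not a list of errors.

```bash
#!/usr/bin/env bash
# Prints the names of services that openstack-status does not report as "active".
# Reads the report on stdin; only lines of the form "name: state" are considered,
# so section headers ("== ... ==") and table rows are ignored.
inactive_services() {
    awk -F': ' '/^[A-Za-z0-9_.-]+: / && $2 != "active" { print $1 }'
}

# Usage (after "source keystonerc_admin"):
#   openstack-status | inactive_services
```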

Open a browser and go to http://192.168.10.10/dashboard:

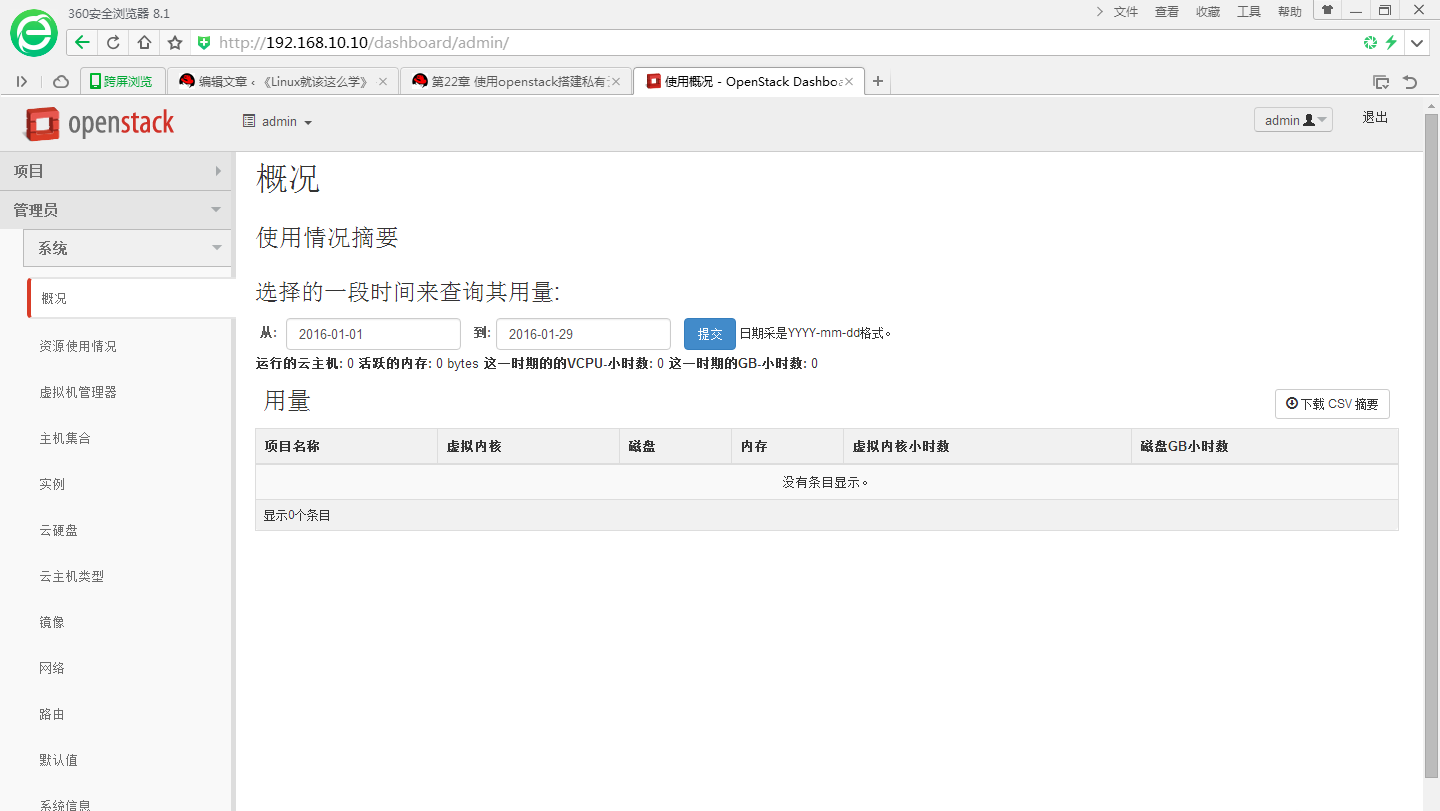

View the login user name and password:

[cc lang="bash"]
[root@openstack ~]# cat keystonerc_admin
export OS_USERNAME=admin
export OS_TENANT_NAME=admin
export OS_PASSWORD=14ad1e723132440c
export OS_AUTH_URL=http://192.168.10.10:5000/v2.0/
export PS1='[\u@\h \W(keystone_admin)]\$ '
[/cc]

After you enter the user name and password, the OpenStack dashboard opens:

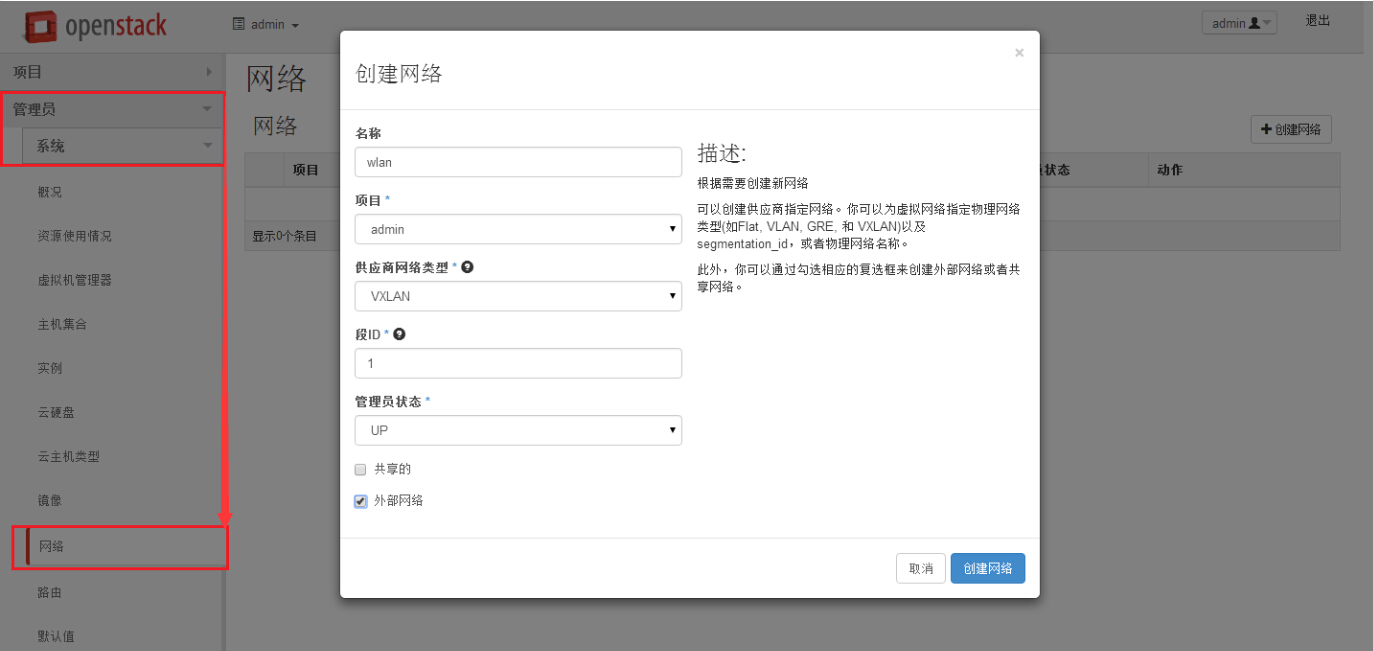

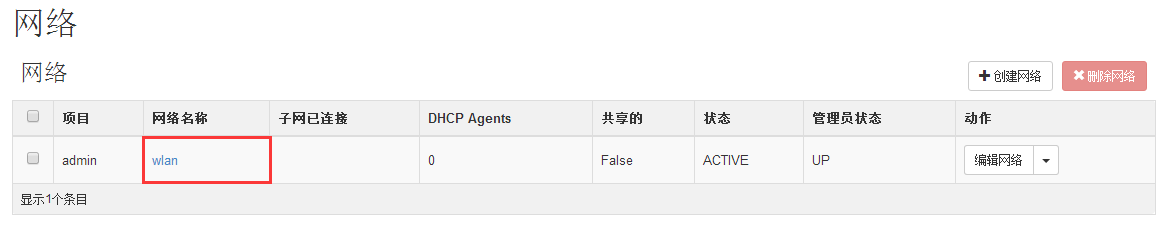

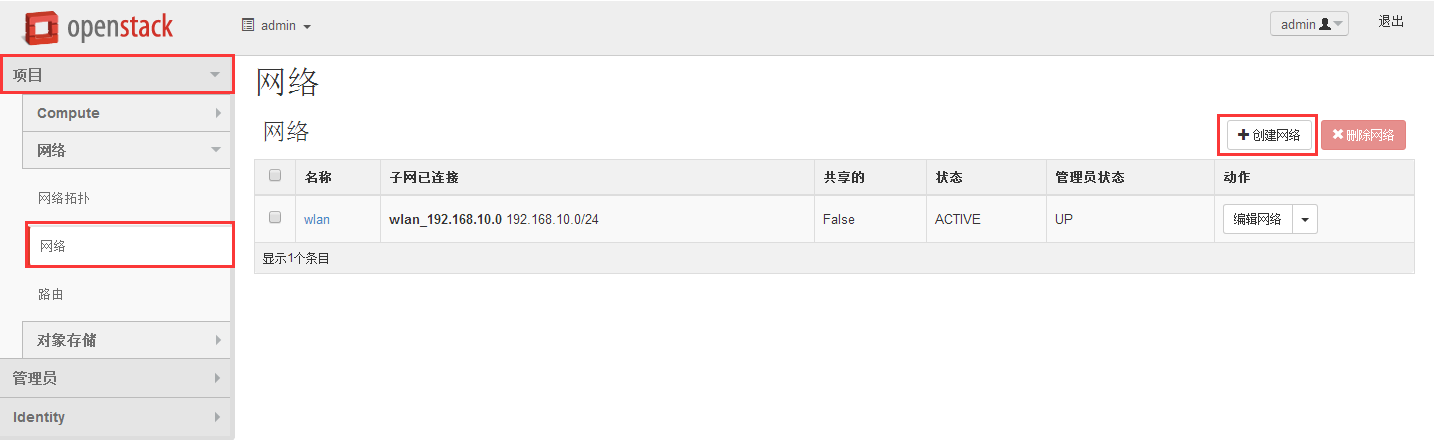
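The dashboard password is the OS_PASSWORD value in keystonerc_admin. The hypothetical helper below reads a single value from that file without sourcing it. Sourcing would also change the shell prompt and export every OS_* variable. It assumes lines of the form `export NAME=value`, with the value optionally in single quotes, as in the file shown above.

```bash
#!/usr/bin/env bash
# Prints the value of one exported variable from a keystonerc file.
# Arguments: variable name, file (default ~/keystonerc_admin).
# Only the first matching "export NAME=" line is used; surrounding single
# quotes are stripped.
rc_value() {
    local name=$1 file=${2:-$HOME/keystonerc_admin}
    sed -n "s/^export ${name}=//p" "$file" | head -n 1 | sed "s/^'\(.*\)'\$/\1/"
}

# Usage:
#   rc_value OS_PASSWORD      # dashboard password for the admin user
#   rc_value OS_AUTH_URL      # Keystone endpoint
```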

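22.5 Using OpenStack Services

22.5.1 Configuring the Virtual Network

The instances in the cloud need to communicate with each other, and external users need to reach the data inside them. To make that possible, we must first configure the cloud platform's network environment. Create a network in OpenStack: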

Edit the network configuration:

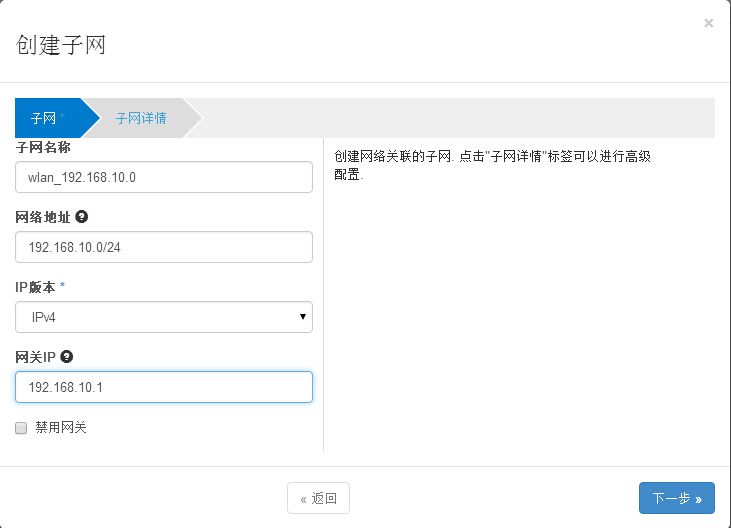

Click "Create Subnet":

Enter the subnet information:

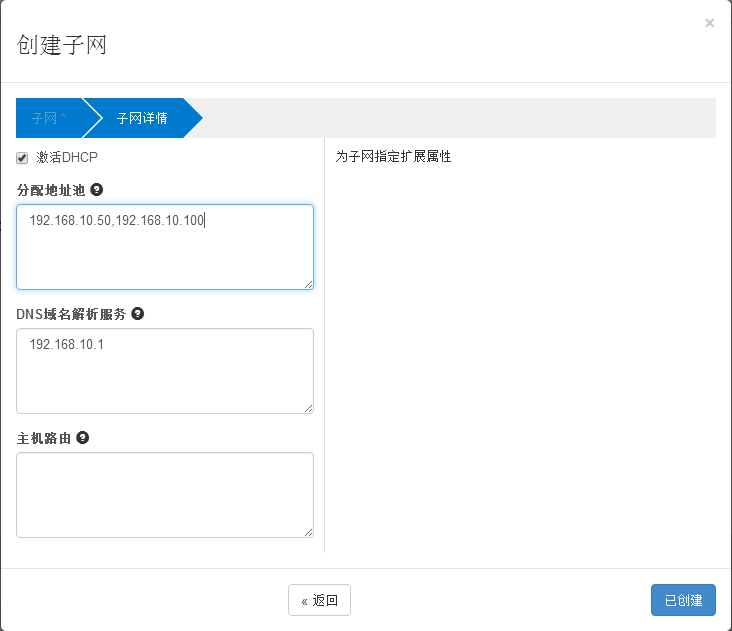

Fill in the subnet details (in the DHCP allocation pool, separate the start and end IP addresses with a comma):

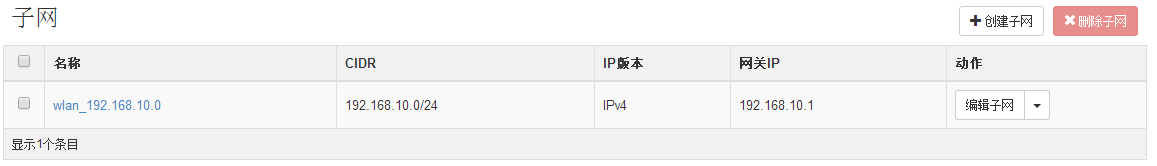

Subnet details:

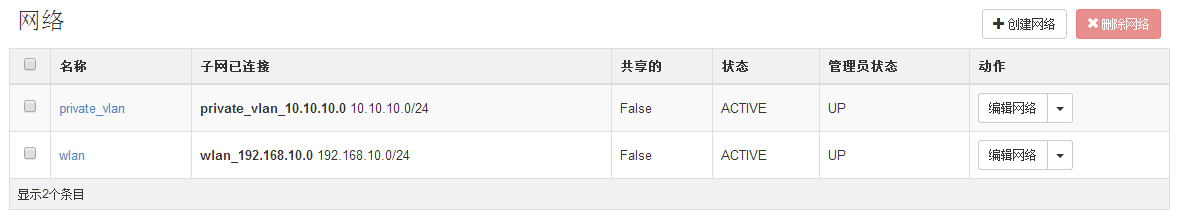
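In the subnet form, each allocation pool is entered as `start,end`. The helpers below are a sketch for checking such a pool before you type it in. They test that both ends lie in the subnet's CIDR and that start is not after end, and they count how many addresses the pool provides. They can also check whether a given address falls inside the pool. The host's own 192.168.10.10 and the gateway 192.168.10.1 should not. The pool 192.168.10.50,192.168.10.100 in the usage lines is a hypothetical example, not necessarily the value in the screenshot.

```bash
#!/usr/bin/env bash
# IPv4 helpers for checking an allocation pool written as "start,end".

# Converts a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeeds if address $1 lies inside network $2 (CIDR notation, e.g. 192.168.10.0/24).
in_cidr() {
    local ip=$1 net=${2%/*} prefix=${2#*/}
    local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Prints the number of addresses in pool "start,end" (both ends included).
pool_size() {
    local start end
    IFS=, read -r start end <<< "$1"
    echo $(( $(ip_to_int "$end") - $(ip_to_int "$start") + 1 ))
}

# Succeeds if address $1 lies inside pool $2 ("start,end").
in_pool() {
    local ip=$1 start end n
    IFS=, read -r start end <<< "$2"
    n=$(ip_to_int "$ip")
    (( n >= $(ip_to_int "$start") && n <= $(ip_to_int "$end") ))
}

# Succeeds if pool $1 lies entirely inside CIDR $2 and start <= end.
check_pool() {
    local start end
    IFS=, read -r start end <<< "$1"
    in_cidr "$start" "$2" && in_cidr "$end" "$2" &&
        (( $(ip_to_int "$start") <= $(ip_to_int "$end") ))
}
```

Example: `check_pool 192.168.10.50,192.168.10.100 192.168.10.0/24` succeeds and `pool_size 192.168.10.50,192.168.10.100` prints 51. `in_pool 192.168.10.10 192.168.10.50,192.168.10.100` fails, which confirms that the host's own address is not handed out.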

Create the private network:

Create a network:

Enter the network information:

Set the network details:

View the network information:

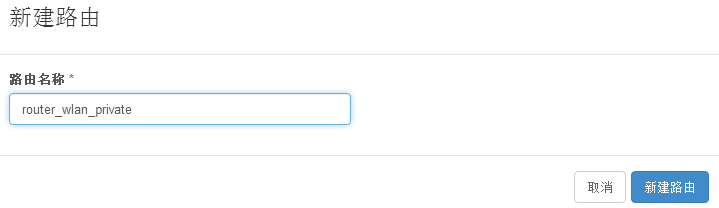

Add a router:

Enter the router name:

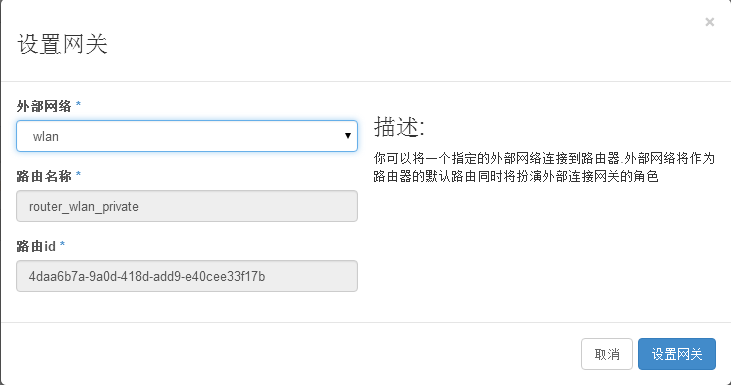

Set the router's gateway:

Set the gateway:

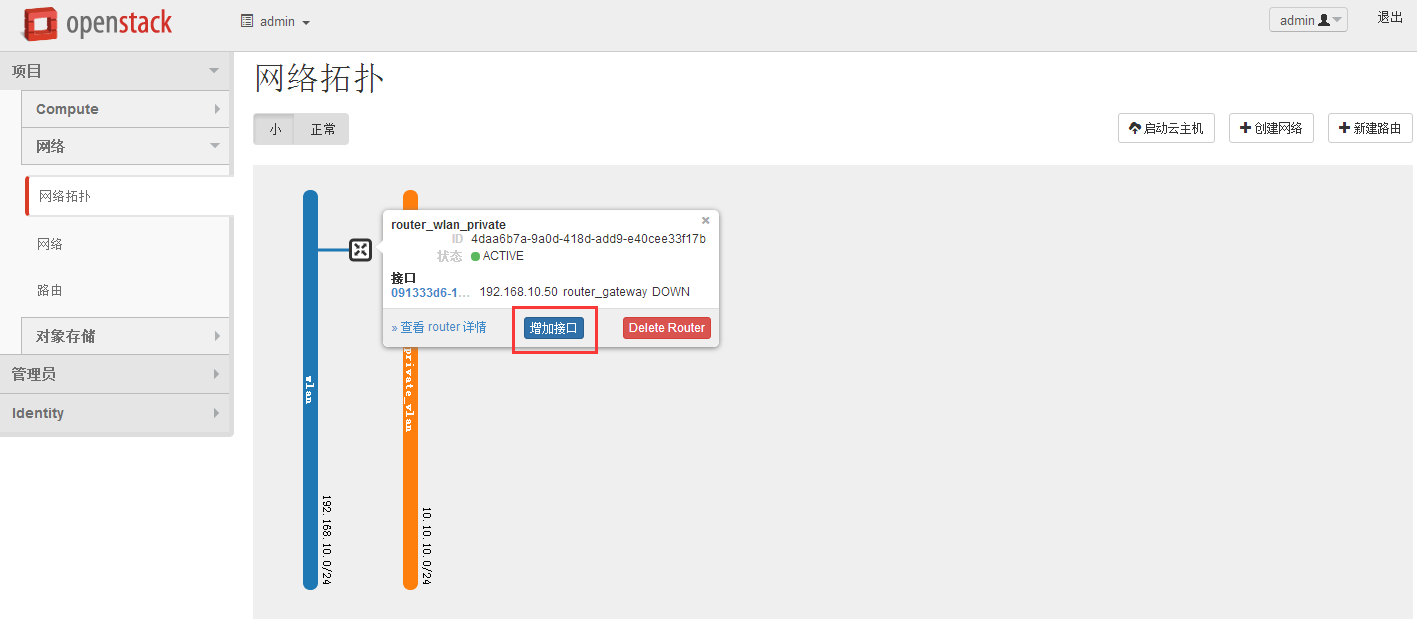

Add an interface from the network topology view:

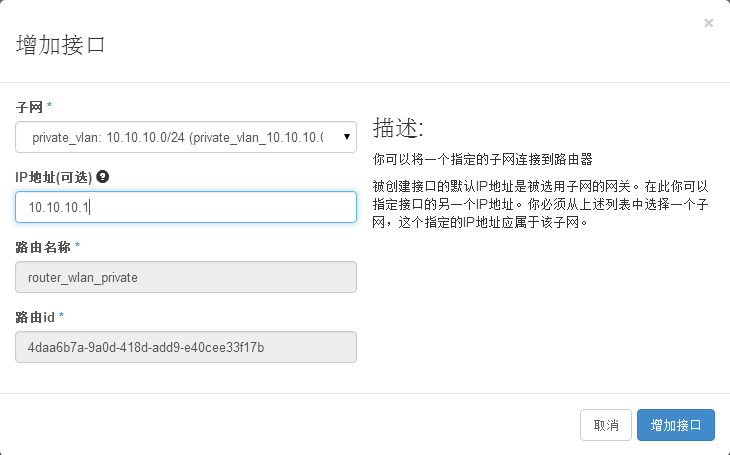

Enter the interface information:

The router's interfaces (after a few seconds, the status of the internal interface changes to ACTIVE):
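The same network, subnet and router steps can also be done from the command line after `source keystonerc_admin`. The sketch below uses the Juno-era neutron client commands and is modeled on the pattern commonly used in RDO documentation for an existing external network. It disables DHCP on the external subnet, as that pattern does. Every name, CIDR and address in it is a hypothetical placeholder. The private range 10.10.10.0/24 is only inferred from the instance address shown later in this chapter. By default the script only prints the commands.

```bash
#!/usr/bin/env bash
# Command-line sketch of the network steps above (Juno-era neutron client).
# Names, CIDRs and pool addresses are hypothetical placeholders.
# DRY_RUN=1 (the default) prints each command instead of running it.

run() {
    if [[ ${DRY_RUN:-1} == 1 ]]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

create_lab_network() {
    # External network on the br-ex side; floating IPs come from its pool.
    run neutron net-create public --router:external=True
    run neutron subnet-create public 192.168.10.0/24 --name public_subnet \
        --disable-dhcp --gateway 192.168.10.1 \
        --allocation-pool start=192.168.10.50,end=192.168.10.100
    # Private tenant network for the instances.
    run neutron net-create private
    run neutron subnet-create private 10.10.10.0/24 --name private_subnet
    # Router: gateway on the public network, interface on the private subnet.
    run neutron router-create router1
    run neutron router-gateway-set router1 public
    run neutron router-interface-add router1 private_subnet
}
```

Run `DRY_RUN=0 create_lab_network` to actually execute the commands. In later OpenStack releases, the unified `openstack` client replaces these neutron commands.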

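22.5.2 Creating Flavors

We can define several flavors (instance type templates) in advance so that different user needs can be met flexibly. First, create a flavor:

Enter the basic flavor information: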
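When choosing flavor sizes, it helps to estimate roughly how many instances of one flavor fit on this single compute node. The sketch below considers RAM only and ignores disk and vCPU limits. The overcommit ratio (Nova's ram_allocation_ratio) defaults to 1.5 and the memory kept back for the host (reserved_host_memory_mb) defaults to 512 MiB. Both defaults are assumptions about Juno's settings, so check /etc/nova/nova.conf for the actual values.

```bash
#!/usr/bin/env bash
# Rough RAM-only capacity estimate for one flavor on a single compute node.
# Arguments: host RAM (MiB), flavor RAM (MiB),
#            ram_allocation_ratio (default 1.5, assumed),
#            reserved host memory in MiB (default 512, assumed).
flavor_capacity() {
    local host_mb=$1 flavor_mb=$2 ratio=${3:-1.5} reserved_mb=${4:-512}
    awk -v h="$host_mb" -v f="$flavor_mb" -v r="$ratio" -v res="$reserved_mb" \
        'BEGIN { avail = h * r - res; print (avail < f ? 0 : int(avail / f)) }'
}

# Usage: flavor_capacity 8192 512   # m1.tiny on an 8 GB host: (8192*1.5-512)/512 -> 23
```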

Create and upload an image:

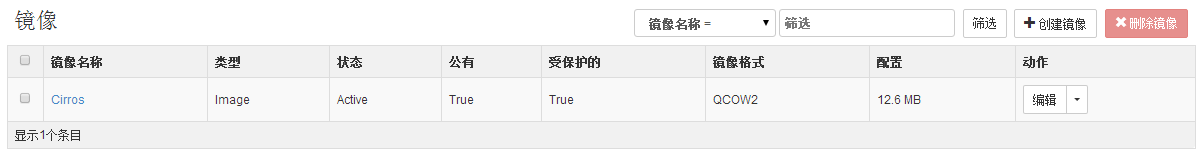

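Cirros is an extremely small Linux system image. It is typically used to test whether a newly built OpenStack platform works, because it is tiny and boots very quickly. Let's upload the Cirros image:

View the uploaded image (the Cirros image uploads remarkably fast):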

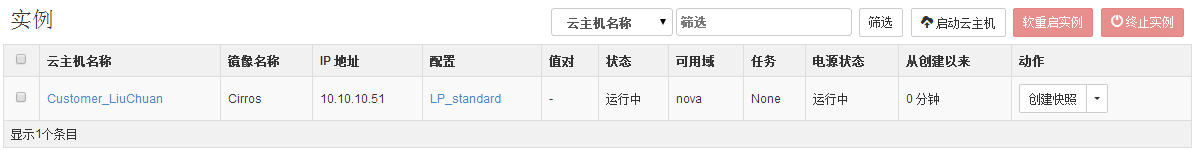
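The Disk Format selected in the upload form has to match the file. The Cirros disk image is a QCOW2 file, and every QCOW2 image starts with the four magic bytes `QFI\xfb`. The sketch below checks for those bytes. It recognizes only QCOW2 and reports every other file as unknown. `qemu-img info FILE` is the more complete check when qemu-img is installed.

```bash
#!/usr/bin/env bash
# Reports "qcow2" if the file starts with the QCOW2 magic bytes "QFI\xfb",
# otherwise "unknown" (other formats such as raw or VMDK are not detected).
image_format() {
    local magic
    # First 4 bytes as hex, e.g. "514649fb" for a QCOW2 image.
    magic=$(head -c 4 -- "$1" | od -An -tx1 | tr -d ' \n')
    if [[ $magic == 514649fb ]]; then
        echo qcow2
    else
        echo unknown
    fi
}

# Usage: image_format cirros-disk.img   # file name is a placeholder
```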

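22.5.3 Creating an Instance

Launch a new instance:

Fill in the instance details (you can choose the flavor you created earlier):

Review the instance's access and security settings:

Attach the private network to the instance:

View the post-creation script data: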

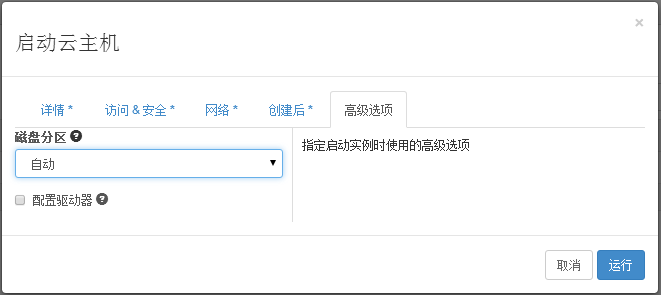

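Review the disk partition setting:

Spawning the instance takes about 10 to 30 seconds. Then view the running instance:

View the instance's network topology (for now, it is only on the internal network):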

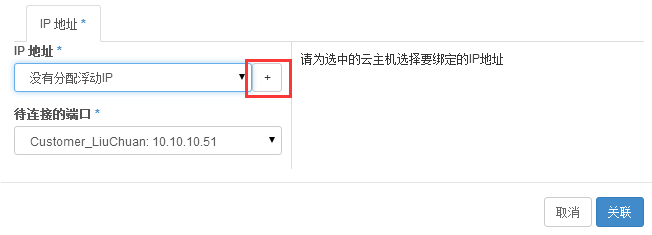
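Spawn time varies between roughly 10 and 30 seconds, so a script that launches instances should poll for the ACTIVE state instead of sleeping for a fixed time. In the generic sketch below, `wait_until` retries any command until it succeeds or a timeout expires. `instance_active` is a hypothetical helper that greps the status row of `nova show`. It assumes the client's table output format, and the instance name demo-vm is a placeholder.

```bash
#!/usr/bin/env bash
# Retries a command every INTERVAL seconds until it succeeds or TIMEOUT
# seconds have passed. Returns 0 on success, 1 on timeout.
# Usage: wait_until TIMEOUT INTERVAL command [args...]
wait_until() {
    local timeout=$1 interval=$2 waited=0
    shift 2
    until "$@"; do
        if (( waited >= timeout )); then
            return 1
        fi
        sleep "$interval"
        waited=$(( waited + interval ))
    done
}

# Hypothetical helper: succeeds once "nova show" reports the instance as ACTIVE.
instance_active() {
    nova show "$1" | grep -Eq '^\| status +\| ACTIVE +\|'
}

# Usage (after "source keystonerc_admin"):
#   wait_until 60 5 instance_active demo-vm && nova list
```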

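Associate a floating IP address with the instance:

Allocate a floating IP for the instance:

Select the IP address to associate:

Associate the instance with the IP address: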

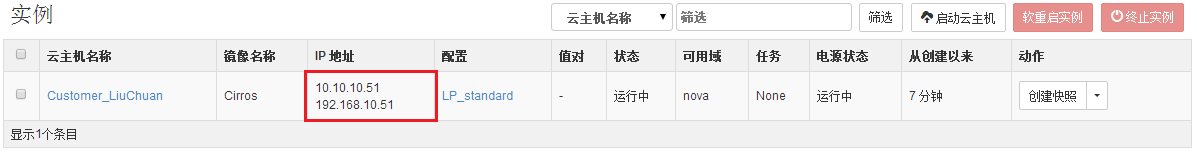

When you view the instance information again, its IP address field now contains an additional value (192.168.10.51):

Try to ping the instance from outside (this fails):

[cc lang="bash"]
[root@openstack ~]# ping 192.168.10.51
PING 192.168.10.51 (192.168.10.51) 56(84) bytes of data.
From 192.168.10.10 icmp_seq=1 Destination Host Unreachable
From 192.168.10.10 icmp_seq=2 Destination Host Unreachable
From 192.168.10.10 icmp_seq=3 Destination Host Unreachable
From 192.168.10.10 icmp_seq=4 Destination Host Unreachable
^C
--- 192.168.10.51 ping statistics ---
6 packets transmitted, 0 received, +4 errors, 100% packet loss, time 5001ms
pipe 4
[/cc]

The reason is that we have not set any security group rules yet. External traffic must be allowed into the instance:

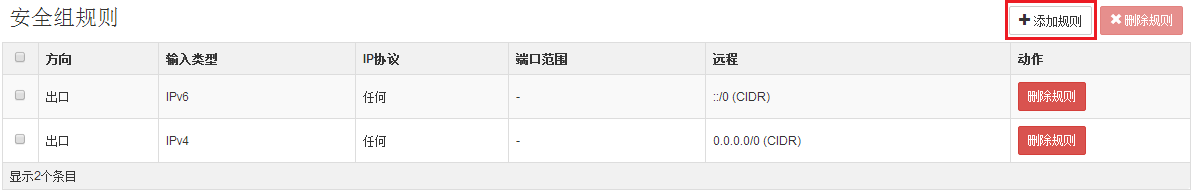

Enter the security group's name and description:

Manage the security group's rules:

Add a rule:

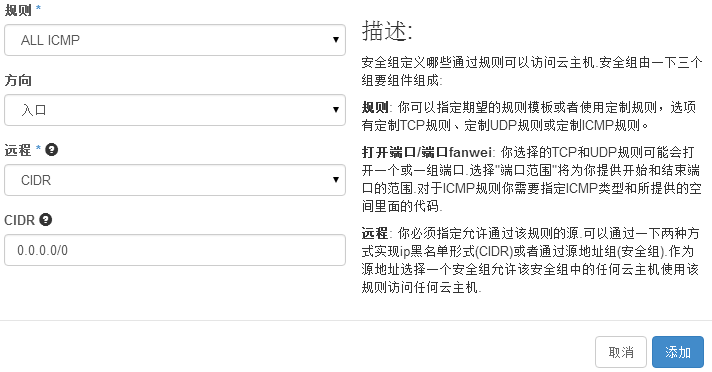

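Allow all inbound ICMP packets. Depending on your workload, you may also need TCP or UDP rules; here we only verify network connectivity:

Edit the instance's security groups:

Apply the new security group to the instance: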

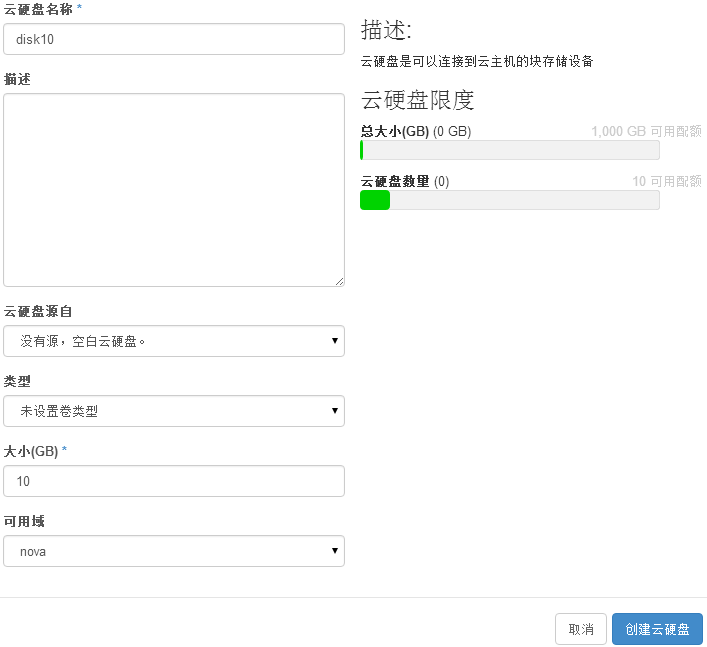
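The same ICMP rule can also be created from the command line. The sketch below uses the Juno-era nova client commands `secgroup-create`, `secgroup-add-rule` and `add-secgroup`. The group name allow-ping, its description and the instance name demo-vm are hypothetical, and by default the commands are only printed. The `packet_loss` and `reachable` helpers turn the ping check used before and after this step into a yes/no test by reading ping's summary line.

```bash
#!/usr/bin/env bash
# Sketch: open inbound ICMP for an instance and verify it with ping.
# Group "allow-ping" and instance "demo-vm" are hypothetical names.
# DRY_RUN=1 (the default) prints the nova commands instead of running them.

run() {
    if [[ ${DRY_RUN:-1} == 1 ]]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

open_icmp() {
    local group=${1:-allow-ping} server=${2:-demo-vm}
    run nova secgroup-create "$group" inbound-icmp
    # -1 -1: all ICMP types and codes; 0.0.0.0/0: any source address.
    run nova secgroup-add-rule "$group" icmp -1 -1 0.0.0.0/0
    run nova add-secgroup "$server" "$group"
}

# Extracts the loss percentage from ping's summary line (read on stdin).
packet_loss() {
    sed -n 's/.* \([0-9.]*\)% packet loss.*/\1/p'
}

# Succeeds if at least one of COUNT echo requests to HOST was answered.
reachable() {
    local host=$1 count=${2:-4} loss
    loss=$(ping -c "$count" "$host" 2>/dev/null | packet_loss)
    [[ -n $loss && $loss != 100 ]]
}

# Usage: DRY_RUN=0 open_icmp && reachable 192.168.10.51 && echo "instance answers ping"
```

For the SSH login used in 22.6, a TCP rule can be added the same way, for example `nova secgroup-add-rule allow-ping tcp 22 22 0.0.0.0/0`.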

Try to ping the instance from outside again:

[cc lang="bash"]
[root@openstack ~]# ping 192.168.10.51
PING 192.168.10.51 (192.168.10.51) 56(84) bytes of data.
64 bytes from 192.168.10.51: icmp_seq=1 ttl=63 time=2.47 ms
64 bytes from 192.168.10.51: icmp_seq=2 ttl=63 time=0.764 ms
64 bytes from 192.168.10.51: icmp_seq=3 ttl=63 time=1.44 ms
64 bytes from 192.168.10.51: icmp_seq=4 ttl=63 time=1.30 ms
^C
--- 192.168.10.51 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 3004ms
rtt min/avg/max/mdev = 0.764/1.497/2.479/0.622 ms
[/cc]

22.5.5 Adding a Cloud Disk

A defining feature of a cloud platform is that the resources used by an instance can be adjusted flexibly and elastically. As an example, we will attach an additional volume (cloud disk) to the instance. First, create the volume:

Fill in the volume information (10 GB in this example):

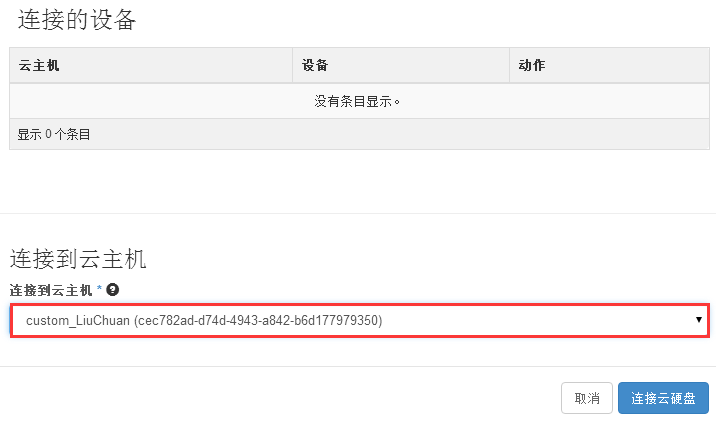

Manage the volume's attachments:

Attach the volume to the instance:

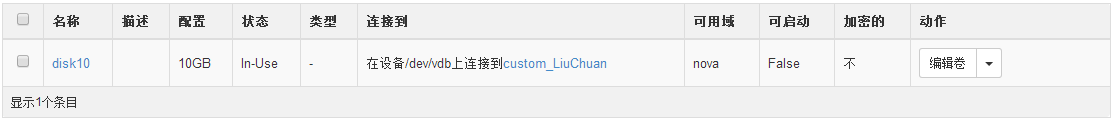

View the disk information inside the instance:

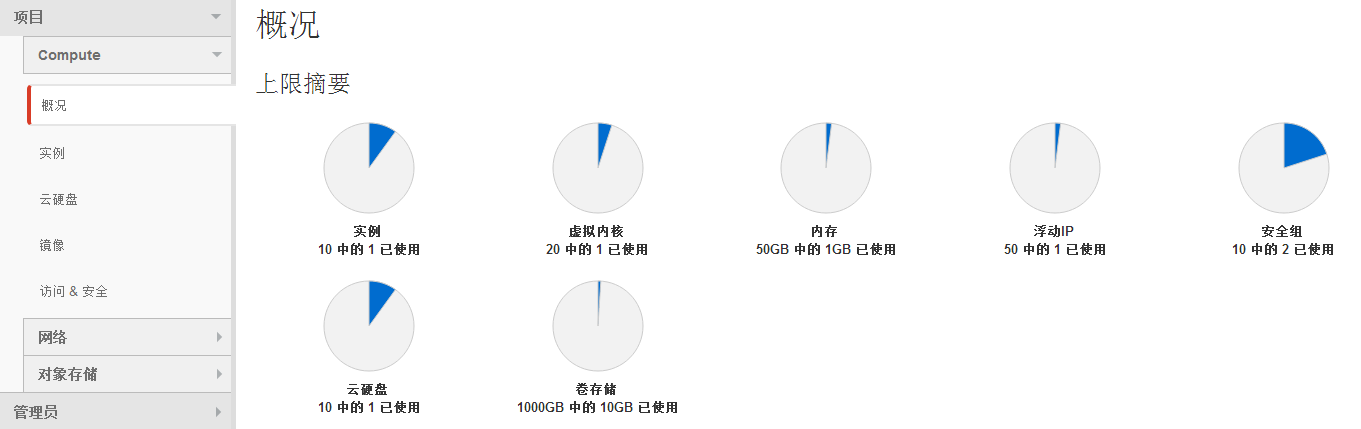
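The numbers that mke2fs prints when this 10 GB volume is formatted inside the instance (in 22.6) can be reproduced by hand. Cinder sizes are in GiB, so "10 GB" means 10 × 2^30 bytes. The sketch below assumes mke2fs's defaults for a filesystem of this size:

- 4096-byte blocks
- one inode per 16384 bytes
- 5% of blocks reserved for root
- 8 × block_size blocks per group, since one bitmap block tracks that many blocks

It ignores mke2fs's rounding, which makes no difference here because every size divides evenly.

```bash
#!/usr/bin/env bash
# Reproduces the headline mke2fs numbers for a volume of SIZE_GIB GiB.
# Assumed defaults: 4096-byte blocks, inode_ratio 16384, 5% reserved blocks,
# and block_size * 8 blocks per group (one block bitmap per group).
ext4_layout() {
    local size_gib=$1 block_size=${2:-4096} inode_ratio=${3:-16384} reserved_pct=${4:-5}
    local bytes=$(( size_gib * 1024 * 1024 * 1024 ))
    local blocks=$(( bytes / block_size ))
    local per_group=$(( block_size * 8 ))
    local groups=$(( (blocks + per_group - 1) / per_group ))
    local inodes=$(( bytes / inode_ratio ))
    local reserved=$(( blocks * reserved_pct / 100 ))
    printf 'blocks=%d groups=%d inodes=%d reserved=%d\n' \
        "$blocks" "$groups" "$inodes" "$reserved"
}

# Usage: ext4_layout 10
#   -> blocks=2621440 groups=80 inodes=655360 reserved=131072
```

These values match the mke2fs output in 22.6: 2621440 blocks, 80 block groups, 655360 inodes and 131072 reserved blocks.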

22.6 Controlling the Instance

After the configuration above, we now have an instance that can be handed over to a user. Take a look at the cloud platform's overview:

Edit the security group to allow TCP and UDP traffic into the instance:

Add one allow rule for TCP and one for UDP:

Log in to the instance. The default user name is "cirros" and the password is "cubswin:)":

[cc lang="bash"]
[root@openstack ~]# ssh cirros@192.168.10.52
The authenticity of host '192.168.10.52 (192.168.10.52)' can't be established.
RSA key fingerprint is 12:ef:c7:fb:57:70:fc:60:88:8c:96:13:38:b1:f6:65.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added '192.168.10.52' (RSA) to the list of known hosts.
cirros@192.168.10.52's password:
$
[/cc]

View the instance's network configuration:

[cc lang="bash"]
$ ip a
1: lo: mtu 16436 qdisc noqueue
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: mtu 1500 qdisc pfifo_fast qlen 1000
    link/ether fa:16:3e:4f:1c:97 brd ff:ff:ff:ff:ff:ff
    inet 10.10.10.51/24 brd 10.10.10.255 scope global eth0
    inet6 fe80::f816:3eff:fe4f:1c97/64 scope link
       valid_lft forever preferred_lft forever
[/cc]

Format and mount the newly created volume:

[cc lang="bash"]
$ df -h
Filesystem                Size      Used Available Use% Mounted on
/dev                    494.3M         0    494.3M   0% /dev
/dev/vda1                23.2M     18.0M      4.0M  82% /
tmpfs                   497.8M         0    497.8M   0% /dev/shm
tmpfs                   200.0K     68.0K    132.0K  34% /run
$ mkdir disk
$ sudo mkfs.ext4 /dev/vdb
mke2fs 1.42.2 (27-Mar-2012)
Filesystem label=
OS type: Linux
Block size=4096 (log=2)
Fragment size=4096 (log=2)
Stride=0 blocks, Stripe width=0 blocks
655360 inodes, 2621440 blocks
131072 blocks (5.00%) reserved for the super user
First data block=0
Maximum filesystem blocks=2684354560
80 block groups
32768 blocks per group, 32768 fragments per group
8192 inodes per group
Superblock backups stored on blocks:
        32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632

Allocating group tables: done
Writing inode tables: done
Creating journal (32768 blocks): done
Writing superblocks and filesystem accounting information: done

$ sudo mount /dev/vdb disk/
$ df -h
Filesystem                Size      Used Available Use% Mounted on
/dev                    494.3M         0    494.3M   0% /dev
/dev/vda1                23.2M     18.0M      4.0M  82% /
tmpfs                   497.8M         0    497.8M   0% /dev/shm
tmpfs                   200.0K     68.0K    132.0K  34% /run
/dev/vdb                  9.8G    150.5M      9.2G   2% /home/cirros/disk
[/cc]

|手机版|灵易深论坛

( 沪ICP备2020036158号-2 )
